Professor Jang Hwan Choi's study, "A Sequential and Intensive Weighted Language Modeling Scheme for Multi-Task Learning-Based Natural Language Understanding," has been published in Applied Sciences. Congratulations!

Journal DOI: https://doi.org/10.3390/app11073095

Abstract

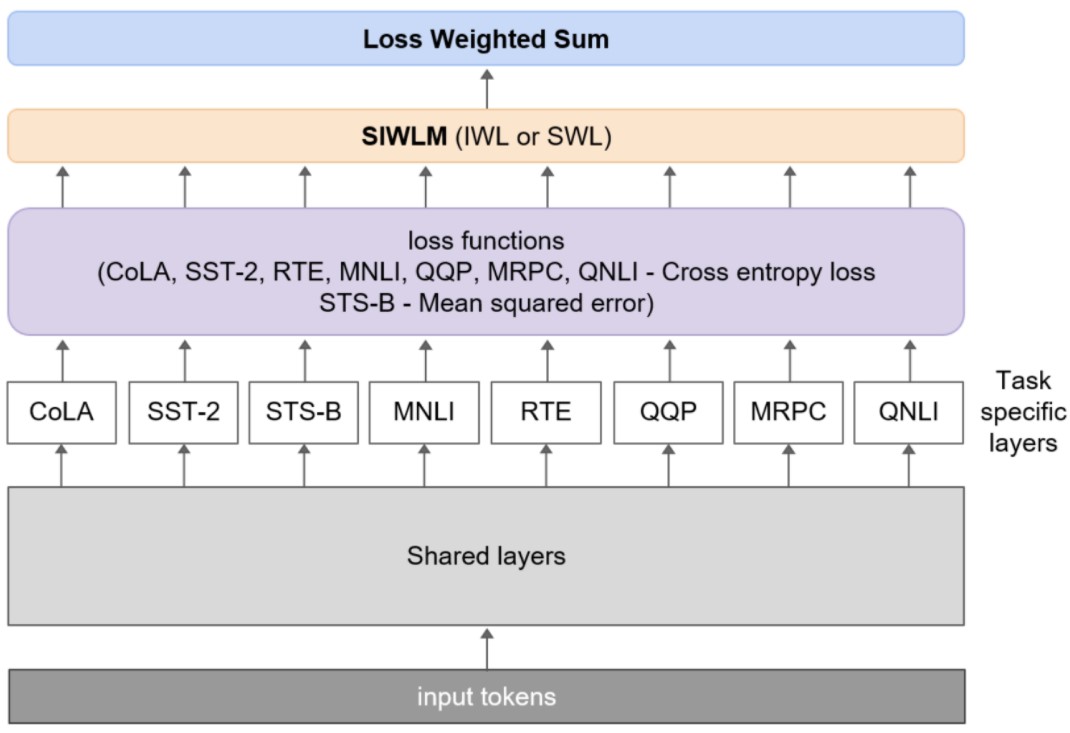

Multi-task learning (MTL) approaches are actively used for various natural language processing (NLP) tasks. The Multi-Task Deep Neural Network (MT-DNN) has contributed significantly to improving the performance of natural language understanding (NLU) tasks. However, one drawback is that confusion about the language representation of various tasks arises during the training of the MT-DNN model. Inspired by the internal-transfer weighting of MTL in medical imaging, we introduce a Sequential and Intensive Weighted Language Modeling (SIWLM) scheme. The SIWLM consists of two stages: (1) Sequential weighted learning (SWL), which trains a model to learn entire tasks sequentially and concentrically, and (2) Intensive weighted learning (IWL), which enables the model to focus on the central task. We apply this scheme to the MT-DNN model and call this model the MTDNN-SIWLM. Our model achieves higher performance than the existing reference algorithms on six out of the eight GLUE benchmark tasks. Moreover, our model outperforms MT-DNN by 0.77 on average on the overall task. Finally, we conducted a thorough empirical investigation to determine the optimal weight for each GLUE task.
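To make the idea of per-task weighting concrete, here is a minimal sketch of a weighted multi-task loss in which one "central" task can be given a larger weight than the others. It only illustrates the general idea behind the intensive stage and does not reproduce the paper's actual weighting rule or training schedule. The function names, the weight values, and the loss values are all hypothetical; only the task names are real GLUE tasks.

```python
from typing import Dict, Iterable


def weighted_loss(losses: Dict[str, float], weights: Dict[str, float]) -> float:
    """Return the weighted sum of per-task losses."""
    return sum(weights[task] * loss for task, loss in losses.items())


def intensive_weights(
    tasks: Iterable[str], central_task: str, central_weight: float = 2.0
) -> Dict[str, float]:
    """Give the central task a larger weight; every other task keeps weight 1.0.

    Assumption: this simple rule is a placeholder, not the weighting used in the paper.
    """
    return {t: (central_weight if t == central_task else 1.0) for t in tasks}


# Illustrative per-task losses for one training step (made-up numbers).
losses = {"MNLI": 0.5, "RTE": 1.0, "SST-2": 0.25}

# Baseline: every task contributes equally.
uniform = {task: 1.0 for task in losses}
total_uniform = weighted_loss(losses, uniform)

# Focused: the hypothetical central task (RTE) counts three times as much.
focused = intensive_weights(losses, central_task="RTE", central_weight=3.0)
total_focused = weighted_loss(losses, focused)

print(total_uniform, total_focused)
```

In a real training loop the per-task losses would come from the model's task-specific heads, and the weights would be chosen empirically for each task, as the abstract describes.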